8-Apache Hive函数高阶应用、性能调优

hadoop离线day08-Apache Hive函数高阶应用、性能调优

今日课程学习目标

掌握explode函数、侧视图使用

掌握行列转换、json数据处理

掌握窗口函数的使用

知道Hive数据压缩、文件存储格式

掌握Hive通用调优(重要的见下述大纲)

今日课程内容大纲

#Hive函数高阶应用(面试笔试、开发高频区域)

explode(UDTF)函数功能

lateral view 侧视图

行列转换

json格式数据解析

窗口函数(Window function)开窗函数

分组TopN、级联累加问题、连续登陆

#Hive的性能调优

hive的数据文件格式 数据压缩

行式存储 列式存储(ORC parquet)

hive通用调优

*join优化

*group by数据倾斜优化

*task并行度问题

其他通用调优

知识点01:Apache Hive--explode函数的使用与限制(UDTF表生成函数)

-

explode属于UDTF函数,表生成函数,输入一行数据输出多行数据。

-

功能:

explode() takes in an array (or a map) as an input and outputs the elements of the array (map) as separate rows. --explode接收map array类型的参数 把map或者array的元素输出,一行一个元素。 explode(array(11,22,33)) 11 22 33 select explode(`array`(11,22,33,44,55)); select explode(`map`("id",10086,"name","allen","age",18)); -

栗子

将NBA总冠军球队数据使用explode进行拆分,并且根据夺冠年份进行倒序排序。

--step1:建表 create table the_nba_championship( team_name string, champion_year array<string> ) row format delimited fields terminated by ',' collection items terminated by '|'; --step2:加载数据文件到表中 load data local inpath '/root/hivedata/The_NBA_Championship.txt' into table the_nba_championship; --step3:验证 select * from the_nba_championship; --step4:使用explode函数对champion_year进行拆分 俗称炸开 select explode(champion_year) from the_nba_championship; --想法是正确的 sql执行确实错误的 select team_name,explode(champion_year) from the_nba_championship; --错误信息 UDTF’s are not supported outside the SELECT clause, nor nested in expressions UDTF 在 SELECT 子句之外不受支持,也不在表达式中嵌套??? -

如果数据不是map或者array 如何使用explode函数呢?

想法设法使用split subsrt regex_replace等函数组合使用 把数据变成array或者map.

create table the_nba_championship_str( team_name string, champion_year string ) row format delimited fields terminated by ','; load data local inpath '/root/hivedata/The_NBA_Championship.txt' into table the_nba_championship_str;

知识点02:Apache Hive--lateral view侧视图的使用

侧视图的原理是将UDTF的结果构建成一个类似于视图的表,然后将原表中的每一行和UDTF函数输出的每一行进行连接,生成一张新的虚拟表

-

背景

-

UDTF函数生成的结果可以当成一张虚拟的表,但是无法和原始表进行组合查询

select name,explode(location) from test_message; --这个sql就是错误的 相当于执行组合查询 -

从理论层面推导,对两份数据进行join就可以了

-

但是,hive专门推出了lateral view侧视图的语,满足上述需要。

-

-

功能:把UDTF函数生成的结果和原始表进行关联,便于用户在select时间组合查询、 lateral view是UDTf的好基友好搭档,实际中经常配合使用。

-

语法:

--lateral view侧视图基本语法如下 select …… from tabelA lateral view UDTF(xxx) 别名 as col1,col2,col3……; --针对上述NBA冠军球队年份排名案例,使用explode函数+lateral view侧视图,可以完美解决 select a.team_name ,b.year from the_nba_championship a lateral view explode(champion_year) b as year; --根据年份倒序排序 select a.team_name ,b.year from the_nba_championship a lateral view explode(champion_year) b as year order by b.year desc; --统计每个球队获取总冠军的次数 并且根据倒序排序 select a.team_name ,count(*) as nums from the_nba_championship a lateral view explode(champion_year) b as year group by a.team_name order by nums desc;

知识点03:Apache Hive--行列转换--多行转单列(collect_list、concat_ws)

-

数据收集函数

collect_set --把多行数据收集为一行 返回set集合 去重无序 collect_list --把多行数据收集为一行 返回list集合 不去重有序 -

字符串拼接函数

concat --直接拼接字符串 concat_ws --指定分隔符拼接 select concat("it","cast","And","heima"); select concat("it","cast","And",null); select concat_ws("-","itcast","And","heima"); select concat_ws("-","itcast","And",null); -

栗子

--原表 +----------------+----------------+----------------+--+ | row2col2.col1 | row2col2.col2 | row2col2.col3 | +----------------+----------------+----------------+--+ | a | b | 1 | | a | b | 2 | | a | b | 3 | | c | d | 4 | | c | d | 5 | | c | d | 6 | +----------------+----------------+----------------+--+ --目标表 +-------+-------+--------+--+ | col1 | col2 | col3 | +-------+-------+--------+--+ | a | b | 1-2-3 | | c | d | 4-5-6 | +-------+-------+--------+--+ --建表 create table row2col2( col1 string, col2 string, col3 int )row format delimited fields terminated by '\t'; --加载数据到表中 load data local inpath '/root/hivedata/r2c2.txt' into table row2col2; select * from row2col2; --最终SQL实现 select col1, col2, concat_ws(',', collect_list(cast(col3 as string))) as col3 from row2col2 group by col1, col2;

知识点04:Apache Hive--行列转换--单列转多行(explode、lateral view)

-

技术原理: explode+lateral view

-

例子

--原表 +-------+-------+--------+--+ | col1 | col2 | col3 | +-------+-------+--------+--+ | a | b | 1,2,3 | | c | d | 4,5,6 | +-------+-------+--------+--+ --目标表 +----------------+----------------+----------------+--+ | row2col2.col1 | row2col2.col2 | row2col2.col3 | +----------------+----------------+----------------+--+ | a | b | 1 | | a | b | 2 | | a | b | 3 | | c | d | 4 | | c | d | 5 | | c | d | 6 | +----------------+----------------+----------------+--+ --创建表 create table col2row2( col1 string, col2 string, col3 string )row format delimited fields terminated by '\t'; --加载数据 load data local inpath '/root/hivedata/c2r2.txt' into table col2row2; select * from col2row2; select explode(split(col3,',')) from col2row2; --SQL最终实现 select col1, col2, lv.col3 as col3 from col2row2 lateral view explode(split(col3, ',')) lv as col3;

知识点05:Apache Hive--json格式数据处理

-

在hive中,没有json类的存在,一般使用string类型来修饰,叫做json字符串,简称json串。

-

在hive中,处理json数据的两种方式

-

hive内置了两个用于解析json的函数

json_tuple --是UDTF 表生成函数 输入一行,输出多行 一次提取读个值 可以单独使用 也可以配合lateral view侧视图使用 get_json_object --是UDF普通函数,输入一行 输出一行 一次只能提取一个值 多次提取多次使用 -

使用==JsonSerDe 类解析==,在加载json数据到表中的时候完成解析动作

-

-

栗子

--创建表 create table tb_json_test1 ( json string ); --加载数据 load data local inpath '/root/hivedata/device.json' into table tb_json_test1; select * from tb_json_test1; -- get_json_object UDF函数 最大弊端是一次只能解析提取一个字段 -- ⭐⭐⭐方法1:一个字段一个字段提取 select --获取设备名称 get_json_object(json,"$.device") as device, --获取设备类型 get_json_object(json,"$.deviceType") as deviceType, --获取设备信号强度 get_json_object(json,"$.signal") as signal, --获取时间 get_json_object(json,"$.time") as stime from tb_json_test1; -- json_tuple 这是一个UDTF函数 可以一次解析提取多个字段 -- ⭐⭐⭐方法2 -- 这是udtf,一进多出,这里是一进一出但是也算是一进多出,这里的多就是1 -- 单独使用 解析所有字段 select json_tuple(json,"device","deviceType","signal","time") as (device,deviceType,signal,stime) from tb_json_test1; -- ⭐⭐⭐方法3:搭配侧视图使用 select json,device,deviceType,signal,stime from tb_json_test1 lateral view json_tuple(json,"device","deviceType","signal","time") b as device,deviceType,signal,stime; --方式2: ⭐⭐⭐方法4:使用JsonSerDe类在建表的时候解析数据 -- 建表的时候直接使用JsonSerDe解析 create table tb_json_test2 ( device string, deviceType string, signal double, `time` string ) ROW FORMAT SERDE 'org.apache.hive.hcatalog.data.JsonSerDe' STORED AS TEXTFILE; load data local inpath '/root/hivedata/device.json' into table tb_json_test2; select * from tb_json_test2;

具体哪个方法好,看具体业务场景

知识点06:Apache Hive--窗口函数--快速理解与语法规则

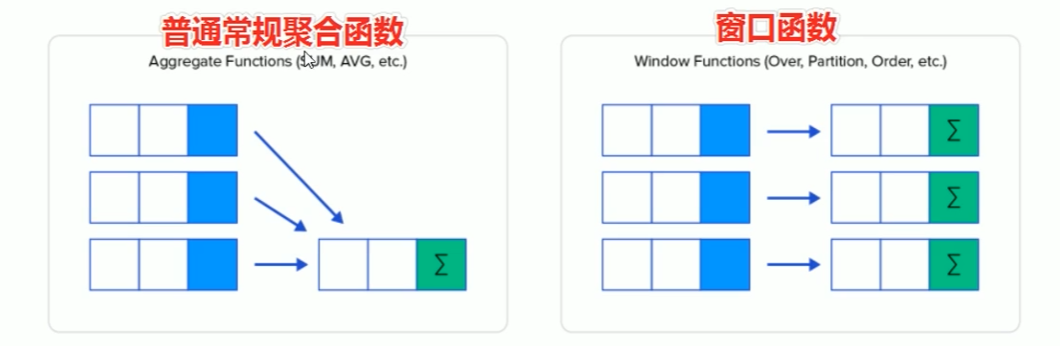

1、快速理解窗口函数功能

-

window function 窗口函数、开窗函数、olap分析函数。

-

窗口:可以理解为操作数据的范围,窗口有大有小,本窗口中操作的数据有多有少。

-

可以简单地解释为类似于聚合函数的计算函数,但是通过GROUP BY子句组合的常规聚合会隐藏正在聚合的各个行,最终输出一行;而窗口函数聚合后还可以访问当中的各个行,并且可以将这些行中的某些属性添加到结果集中。

--建表加载数据

CREATE TABLE employee(

id int,

name string,

deg string,

salary int,

dept string

) row format delimited

fields terminated by ',';

load data local inpath '/root/hivedata/employee.txt' into table employee;

select * from employee;

----sum+group by普通常规聚合操作------------

select dept,sum(salary) as total from employee group by dept;

select id,dept,sum(salary) as total from employee group by dept; --添加id至结果,错误sql

+-------+---------+

| dept | total |

+-------+---------+

| AC | 60000 |

| TP | 120000 |

+-------+---------+

----sum+窗口函数聚合操作------------

select id,name,deg,salary,dept,sum(salary) over(partition by dept) as total from employee;

+-------+-----------+----------+---------+-------+---------+

| id | name | deg | salary | dept | total |

+-------+-----------+----------+---------+-------+---------+

| 1204 | prasanth | dev | 30000 | AC | 60000 |

| 1203 | khalil | dev | 30000 | AC | 60000 |

| 1206 | kranthi | admin | 20000 | TP | 120000 |

| 1202 | manisha | cto | 50000 | TP | 120000 |

| 1201 | gopal | manager | 50000 | TP | 120000 |

+-------+-----------+----------+---------+-------+---------+

2、窗口函数语法规则

具有OVER语句的函数叫做窗口函数。

Function OVER ([PARTITION BY <...>] [ORDER BY <....>] [<window_expression>])

--1、Function可以是下面分类中的任意一个

--聚合函数:比如sum、max、avg、max、min等

--排序函数:比如rank、row_number等

--分析函数:比如lead、lag、first_value等

--2、OVER 窗口函数语法关键字与标识

--3、PARTITION BY <...>功能类似于group by,用于指定分组,相同的分为一组。如果没有指定PARTITION BY,那么整张表的所有行就是一组;

--4、ORDER BY <....> 用于指定每个分组内的数据排序规则 ,默认是升序ASC,支持ASC、DESC;

--5、window_expression window表达式,也叫window子句,用于指定每个窗口中操作的数据范围

⭐⭐⭐

有row between 3-5 表示从3到5行

还有range between 3-5 表示值从3到5

- 建表加载数据 后续练习使用

---建表并且加载数据

create table website_pv_info(

cookieid string,

createtime string, --day

pv int

) row format delimited

fields terminated by ',';

create table website_url_info (

cookieid string,

createtime string, --访问时间

url string --访问页面

) row format delimited

fields terminated by ',';

load data local inpath '/root/hivedata/website_pv_info.txt' into table website_pv_info;

load data local inpath '/root/hivedata/website_url_info.txt' into table website_url_info;

select * from website_pv_info;

select * from website_url_info;

知识点07:Apache Hive--窗口函数--聚合函数

-

语法

sum|max|min|avg OVER ([PARTITION BY <...>] [ORDER BY <....>] [<window_expression>]) -

重点:有PARTITION BY 没有PARTITION BY的区别;有ORDER BY没有ORDER BY的区别。

- 有没有partition by 影响的是全局聚合 还是分组之后 每个组内聚合。

- 有没有==order by的区别==:

- 没有order by,默认是rows between,首行到最后行,这里的"行"是物理上的行;

- 有order by,默认是range between,首行到当前行,这里的"行"是逻辑上的行,由字段的值的区间range来划分范围。

-

栗子

--1、求出每个用户总pv数 sum+group by普通常规聚合操作 select cookieid,sum(pv) as total_pv from website_pv_info group by cookieid; +-----------+-----------+ | cookieid | total_pv | +-----------+-----------+ | cookie1 | 26 | | cookie2 | 35 | +-----------+-----------+ --2、sum+窗口函数 需要注意:这里都没有使用window子句指定范围,那么默认值是rows还是range呢??? --当没有order by也没有window子句的时候,默认是rows between,从第一行到最后一行,即分组内的所有行聚合。 --sum(...) over( ) select cookieid,createtime,pv, sum(pv) over() as total_pv from website_pv_info; --sum(...) over( partition by... ) select cookieid,createtime,pv, sum(pv) over(partition by cookieid) as total_pv from website_pv_info; --当有order by但是缺失window子句的时候,默认是range between,为第一行的值到当前行的值构成的区间 --sum(...) over( partition by... order by ... ) select cookieid,createtime,pv, sum(pv) over(partition by cookieid order by createtime) as current_total_pv from website_pv_info; --上述是根据creattime排序,因此range区间是由createtime的字段值来划分,第一行到当前行。 --下面是根据pv排序,因此range区间是由pv的字段值来划分,第一行到当前行。 --下面两个sql等价 select cookieid,createtime,pv, sum(pv) over(partition by cookieid order by pv) as current_total_pv from website_pv_info; --这属于有order by没有 window子句 默认range select cookieid,createtime,pv, sum(pv) over(partition by cookieid order by pv range between unbounded preceding and current row) as current_total_pv from website_pv_info; --这是手动指定根据rows来划分范围 select cookieid,createtime,pv, sum(pv) over(partition by cookieid order by pv rows between unbounded preceding and current row ) as current_total_pv from website_pv_info;

⭐⭐⭐HIVE SQL 聚合函数与 rows between、 range between详解

知识点08:Apache Hive--窗口函数--window子句

直译叫做window表达式 ,通俗叫法称之为window子句。

-

功能:控制窗口操作的范围。

-

语法

unbounded 无边界

preceding 往前

following 往后

unbounded preceding 往前所有行,即初始行

n preceding 往前n行

unbounded following 往后所有行,即末尾行

n following 往后n行

current row 当前行

语法

(ROWS | RANGE) BETWEEN (UNBOUNDED | [num]) PRECEDING AND ([num] PRECEDING | CURRENT ROW | (UNBOUNDED | [num]) FOLLOWING)

(ROWS | RANGE) BETWEEN CURRENT ROW AND (CURRENT ROW | (UNBOUNDED | [num]) FOLLOWING)

(ROWS | RANGE) BETWEEN [num] FOLLOWING AND (UNBOUNDED | [num]) FOLLOWING

-

栗子

这里以rows between为例来讲解窗口范围的划分,rows表示物理层面上的行,跟字段值没关系。

--默认从第一行到当前行 select cookieid,createtime,pv, sum(pv) over(partition by cookieid order by createtime) as pv1 from website_pv_info; --第一行到当前行 等效于rows between不写 默认就是第一行到当前行 select cookieid,createtime,pv, sum(pv) over(partition by cookieid order by createtime rows between unbounded preceding and current row) as pv2 from website_pv_info; --向前3行至当前行 select cookieid,createtime,pv, sum(pv) over(partition by cookieid order by createtime rows between 3 preceding and current row) as pv4 from website_pv_info; --向前3行 向后1行 select cookieid,createtime,pv, sum(pv) over(partition by cookieid order by createtime rows between 3 preceding and 1 following) as pv5 from website_pv_info; --当前行至最后一行 select cookieid,createtime,pv, sum(pv) over(partition by cookieid order by createtime rows between current row and unbounded following) as pv6 from website_pv_info; --第一行到最后一行 也就是分组内的所有行 select cookieid,createtime,pv, sum(pv) over(partition by cookieid order by createtime rows between unbounded preceding and unbounded following) as pv6 from website_pv_info;

知识点09:Apache Hive--窗口函数--排序函数(row_number等)

学的第二类窗口函数

-

功能:主要对数据分组排序之后,组内顺序标号。

- 不能用row 和 range between了

-

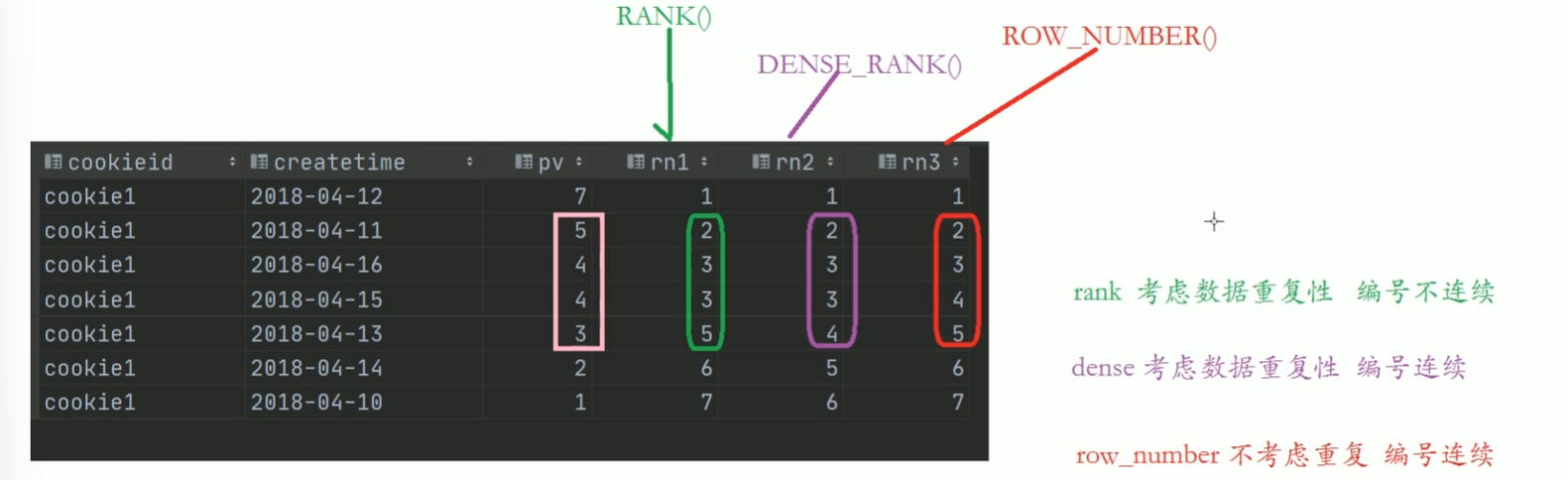

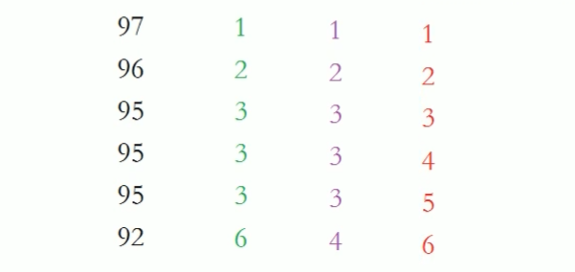

核心函数:row_number、rank、dense_rank

-

适合场景:分组TopN业务分析(注意哦 不是全局topN)

- 找出我们班男女中最有钱的前两个

- 找出我们班成绩最高的3个人

-

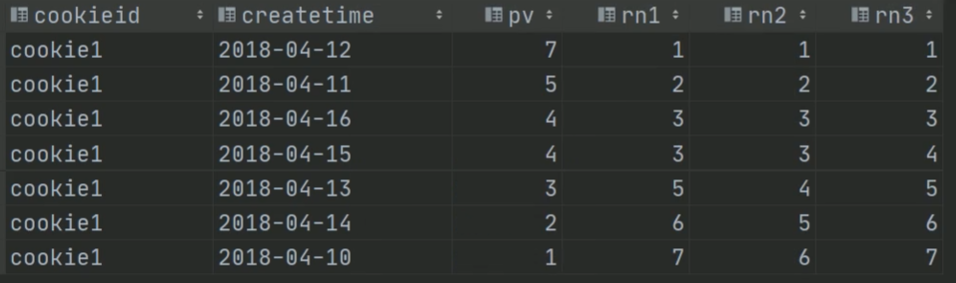

栗子

SELECT cookieid, createtime, pv, RANK() OVER(PARTITION BY cookieid ORDER BY pv desc) AS rn1, DENSE_RANK() OVER(PARTITION BY cookieid ORDER BY pv desc) AS rn2, ROW_NUMBER() OVER(PARTITION BY cookieid ORDER BY pv DESC) AS rn3 FROM website_pv_info;

解释:

再来个例子:

```sql

--需求:找出每个用户访问pv最多的Top3 重复并列的不考虑

SELECT * from

(SELECT

cookieid,

createtime,

pv,

ROW_NUMBER() OVER(PARTITION BY cookieid ORDER BY pv DESC) AS seq

FROM website_pv_info) tmp where tmp.seq <4;

-

ntile函数 row_number的远房表哥

-

功能:将分组排序之后的数据分成指定的若干个部分(若干个桶)

-

规则:尽量平均分配 ,优先满足最小的桶,彼此最多不相差1个。

- 第一桶最多,例如7个分三桶就分成 3 2 2

- 就能去前三分之一就是前三个,取中间三分之一就是中间两个

-

栗子

--把每个分组内的数据分为3桶 SELECT cookieid, createtime, pv, NTILE(3) OVER(PARTITION BY cookieid ORDER BY createtime) AS rn2 FROM website_pv_info ORDER BY cookieid,createtime; --需求:统计每个用户pv数最多的前3分之1天。 --理解:将数据根据cookieid分 根据pv倒序排序 排序之后分为3个部分 取第一部分 SELECT * from (SELECT cookieid, createtime, pv, NTILE(3) OVER(PARTITION BY cookieid ORDER BY pv DESC) AS rn FROM website_pv_info) tmp where rn =1;

-

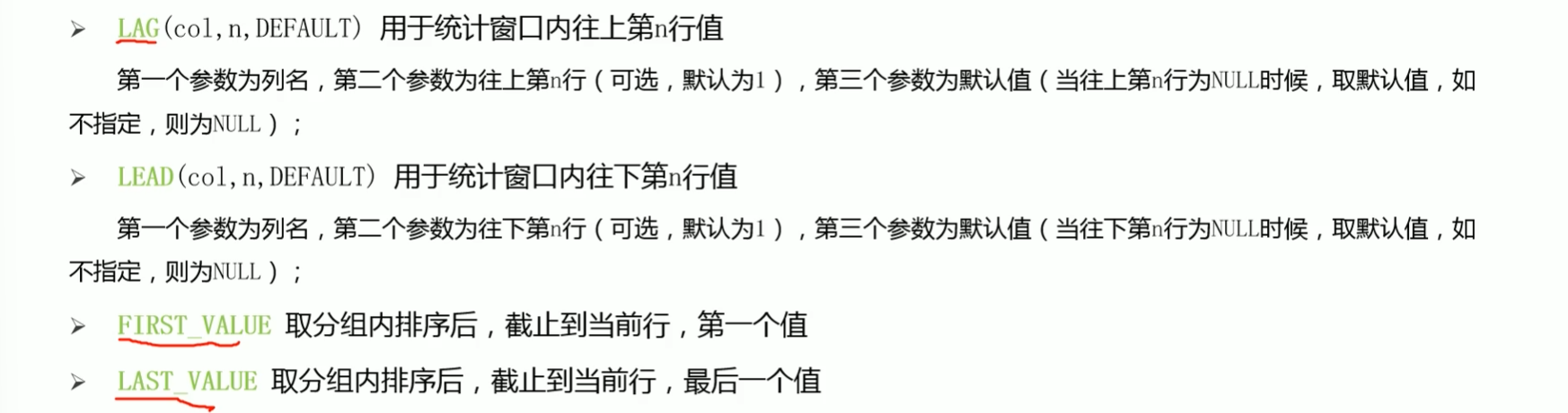

知识点10:Apache Hive--窗口函数--lag、lead函数

窗口分析函数

--LAG 用于统计窗口内往上第n行值

SELECT cookieid,

createtime,

url,

ROW_NUMBER() OVER(PARTITION BY cookieid ORDER BY createtime) AS rn,

LAG(createtime,1,'1970-01-01 00:00:00') OVER(PARTITION BY cookieid ORDER BY createtime) AS last_1_time,

LAG(createtime,2) OVER(PARTITION BY cookieid ORDER BY createtime) AS last_2_time

FROM website_url_info;

--LEAD 用于统计窗口内往下第n行值

SELECT cookieid,

createtime,

url,

ROW_NUMBER() OVER(PARTITION BY cookieid ORDER BY createtime) AS rn,

LEAD(createtime,1,'1970-01-01 00:00:00') OVER(PARTITION BY cookieid ORDER BY createtime) AS next_1_time,

LEAD(createtime,2) OVER(PARTITION BY cookieid ORDER BY createtime) AS next_2_time

FROM website_url_info;

--FIRST_VALUE 取分组内排序后,截止到当前行,第一个值

SELECT cookieid,

createtime,

url,

ROW_NUMBER() OVER(PARTITION BY cookieid ORDER BY createtime) AS rn,

FIRST_VALUE(url) OVER(PARTITION BY cookieid ORDER BY createtime) AS first1

FROM website_url_info;

--LAST_VALUE 取分组内排序后,截止到当前行,最后一个值

SELECT cookieid,

createtime,

url,

ROW_NUMBER() OVER(PARTITION BY cookieid ORDER BY createtime) AS rn,

LAST_VALUE(url) OVER(PARTITION BY cookieid ORDER BY createtime) AS last1

FROM website_url_info;

⭐czc的窗口函数总结

- 关于

order by- 窗口函数中的

ORDER BY(核心作用:定义窗口内的计算顺序) - 最后的全局

ORDER BY(核心作用:定义结果集的输出顺序)

- 窗口函数中的

知识点11:Apache Hive--文件存储格式(text、ORC、parquet)

-

列式存储、行式存储

- 数据最终在文件中底层以什么样的形成保存。

-

行列存储的优缺点:

- 行式存储:优点:便于插入、更新

- 列式存储:优点:便于查询

- 因为数仓主要工作是对数据进行分析,所以使用大多数时候列式存储,但是hive默认是行式存储

-

Hive中表的数据存储格式,不是只支持text文本格式,还支持其他很多格式。

-

hive表的文件格式是如何指定的呢? 建表的时候通过STORED AS 语法指定。如果没有指定默认都是textfile。

-

Hive中主流的几种文件格式。

-

textfile 文件格式 默认的

-

ORC、Parquet(facebook的) 列式存储格式。

- Parquet效率不如ORC,但是比文本格式强一些

都是列式存储格式,底层是以二进制形式存储。数据存储效率极高,对于查询贼方便。 二进制意味着肉眼无法直接解析,hive可以自解析。

-

!Pasted image 20250410161300.png

-

栗子

分别使用3种不同格式存储数据,去HDFS上查看底层文件存储空间的差异。

注意:load是纯复制操作,对于数据的文本文件,必须要先select查询出来再insert,下面例子2后面写了

--1、创建表,存储数据格式为TEXTFILE create table log_text ( track_time string, url string, session_id string, referer string, ip string, end_user_id string, city_id string ) ROW FORMAT DELIMITED FIELDS TERMINATED BY '\t' STORED AS TEXTFILE; --如果不写stored as textfile 默认就是textfile --加载数据 load data local inpath '/root/hivedata/log.data' into table log_text;

⭐加载后文件大小是'18MB'

--2、创建表,存储数据格式为ORC

create table log_orc(

track_time string,

url string,

session_id string,

referer string,

ip string,

end_user_id string,

city_id string

)

ROW FORMAT DELIMITED FIELDS TERMINATED BY '\t'

STORED AS orc ;

--向表中插入数据 思考为什么不能使用load命令加载? 因为load是纯复制移动操作 不会调整文件格式。

insert into table log_orc select * from log_text;

⭐加载后文件大小是'2.78MB'

--3、创建表,存储数据格式为parquet

create table log_parquet(

track_time string,

url string,

session_id string,

referer string,

ip string,

end_user_id string,

city_id string

)

ROW FORMAT DELIMITED FIELDS TERMINATED BY '\t'

STORED AS PARQUET ;

--向表中插入数据

insert into table log_parquet select * fro m log_text ;

⭐加载进来后文件大小是'12.9MB'

```

知识点12:Apache Hive--数据压缩和文件格式搭配(ORC+snappy)

-

Hive的默认执行引擎是MapReduce,因此通常所说的Hive压缩指的是MapReduce的压缩。

-

压缩是指通过算法对数据进行重新编排,降低存储空间。无损压缩。

-

MapReduce可以在两个阶段进行数据压缩

- map的输出

- 减少shuffle的数据量 提高shuffle时网络IO的效率

- reduce的输出

- 减少输出文件的大小 降低磁盘的存储空间

- map的输出

-

压缩的弊端

- 浪费时间

- 消耗CPU、内存

- 某些优秀的压缩算法需要钱

-

压缩的算法(推荐使用snappy)

- ORC存储会默认使用ZLIB压缩

Snappy org.apache.hadoop.io.compress.SnappyCodec -

Hive中压缩的设置:注意 本质还是指的是MapReduce的压缩

--设置Hive的中间压缩 也就是map的输出压缩 1)开启 hive 中间传输数据压缩功能 set hive.exec.compress.intermediate=true; 2)开启 mapreduce 中 map 输出压缩功能 set mapreduce.map.output.compress=true; 3)设置 mapreduce 中 map 输出数据的压缩方式 set mapreduce.map.output.compress.codec = org.apache.hadoop.io.compress.SnappyCodec; --设置Hive的最终输出压缩,也就是Reduce输出压缩 1)开启 hive 最终输出数据压缩功能 set hive.exec.compress.output=true; 2)开启 mapreduce 最终输出数据压缩 set mapreduce.output.fileoutputformat.compress=true; 3)设置 mapreduce 最终数据输出压缩方式 set mapreduce.output.fileoutputformat.compress.codec =org.apache.hadoop.io.compress.SnappyCodec; 4)设置 mapreduce 最终数据输出压缩为块压缩 还可以指定RECORD set mapreduce.output.fileoutputformat.compress.type=BLOCK;--设置完毕之后 只有当HiveSQL底层通过MapReduce程序执行 才会涉及压缩。 --已有普通格式的表 select * from student_hdfs ; --ctas语句 create table student_snappy as select * from student_hdfs ; -

在实际开发中,可以根据需求选择不同的文件格式并且搭配不同的压缩算法。可以得到更好的存储效果。

--不指定压缩格式 代表什么呢? -- orc 存储文件默认采用ZLIB 压缩。比 snappy 压缩的小 STORED AS orc; --2.78M --以ORC格式存储 不压缩 STORED AS orc tblproperties ("orc.compress"="NONE"); --7.69M --以ORC格式存储 使用snappy压缩 STORED AS orc tblproperties ("orc.compress"="SNAPPY"); --3.78M

知识点13:Apache Hive--通用调优--fetch抓取机制、MR本地模式

Fetch抓取机制

-

功能:在执行sql的时候,能不走 MapReduce程序处理就尽量不走MapReduce程序处理。

-

尽量直接去操作数据文件。

-

设置: hive.fetch.task.conversion= more。、

在下述3种情况下 sql不走mr程序

--全局查找

select * from student;

--字段查找

select num,name from student;

--limit 查找

select num,name from student limit 2;

MapReduce本地模式

-

功能:如果非要执行MapReduce程序,能够本地执行的,尽量不提交yarn上执行。

-

默认是关闭的。意味着只要走MapReduce就提交yarn执行。

mapreduce.framework.name = local 本地模式 mapreduce.framework.name = yarn 集群模式 -

Hive提供了一个参数,自动切换MapReduce程序为本地模式,如果不满足条件,就执行yarn模式。

set hive.exec.mode.local.auto = true; --3个条件必须都满足 自动切换本地模式 The total input size of the job is lower than: hive.exec.mode.local.auto.inputbytes.max (128MB by default) --数据量小于128M The total number of map-tasks is less than: hive.exec.mode.local.auto.tasks.max (4 by default) --maptask个数少于4个 The total number of reduce tasks required is 1 or 0. --reducetask个数是0 或者 1

本地模式不能本质解决问题,用MR跑hive就是慢,建议切换引擎,建议用spark、tez

-

切换Hive的执行引擎

WARNING: Hive-on-MR is deprecated in Hive 2 and may not be available in the future versions. Consider using a different execution engine (i.e. spark, tez) or using Hive 1.X releases. 如果针对Hive的调优依然无法满足你的需求 还是效率低, 尝试使用spark计算引擎 或者Tez.

知识点14:Apache Hive--通用调优--join优化

底层还是MapReduce的 join 优化

对join的优化最终都是往Map端的join靠近,避免走reduce的join

实在不行只能用reduce的join慢慢跑,至少能跑出来

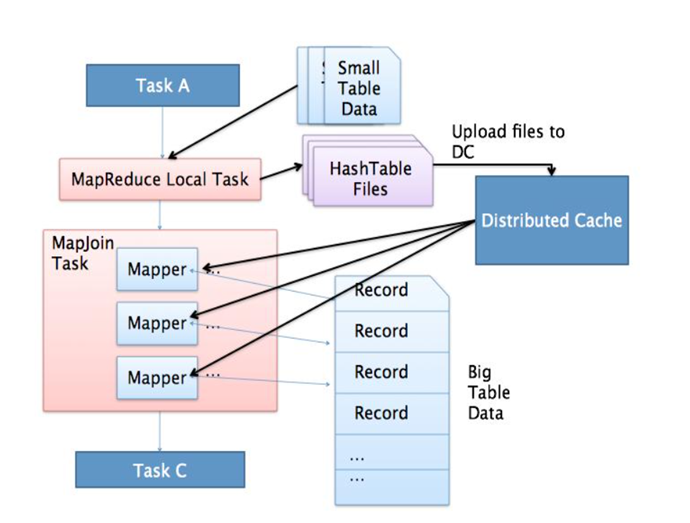

MapJoin

-

map join

-

是MAP阶段的join,没有Reduce阶段,只在map阶段完成数据join动作

-

适合于小表join大表或者小表Join小表

-

核心:必须有一张表是所谓的小表 smalltable,因为小表要放入Distributed Cache

-

所以当Distributed Cache放不下的时候就自动走Reduce段Join

-

因为没有Reduce过程,就没有了shuffle过程,效率大大提高

#是否开启自动转为mapjoin 在满足条件的情况下 默认true hive.auto.convert.join=true Hive老版本 #如果参与的一个表大小满足条件 转换为map join hive.mapjoin.smalltable.filesize=25000000 Hive2.0之后版本 #是否启用基于输入文件的大小,将reduce join转化为Map join的优化机制。假设参与join的表(或分区)有N个,如果打开这个参数,并且有N-1个表(或分区)的大小总和小于hive.auto.convert.join.noconditionaltask.size参数指定的值,那么会直接将join转为Map join。 hive.auto.convert.join.noconditionaltask=true hive.auto.convert.join.noconditionaltask.size=512000000 -

ReduceJoin

- reduce join (commonjoin)

- 适合于大表Join大表

BucketJoin

- bucket join

-

适合于大表Join大表

-

方式1:Bucktet Map Join

- 语法:

clustered by colName(参与join的字段) - 参数:

set hive.optimize.bucketmapjoin = true - 要求: 分桶字段 = Join字段 ,分桶的个数相等或者成倍数,必须是在map join中

- 语法:

-

方式2:

Sort Merge Bucket Join(SMB)桶表的升级操作(排序的桶表)- 基于有序的数据Join

- 语法:

clustered by colName sorted by (colName) - 参数

set hive.optimize.bucketmapjoin = true;

set hive.auto.convert.sortmerge.join=true;

set hive.optimize.bucketmapjoin.sortedmerge = true;

set hive.auto.convert.sortmerge.join.noconditionaltask=true; - 要求: 分桶字段 = Join字段 = 排序字段,分桶的个数相等或者成倍数

-

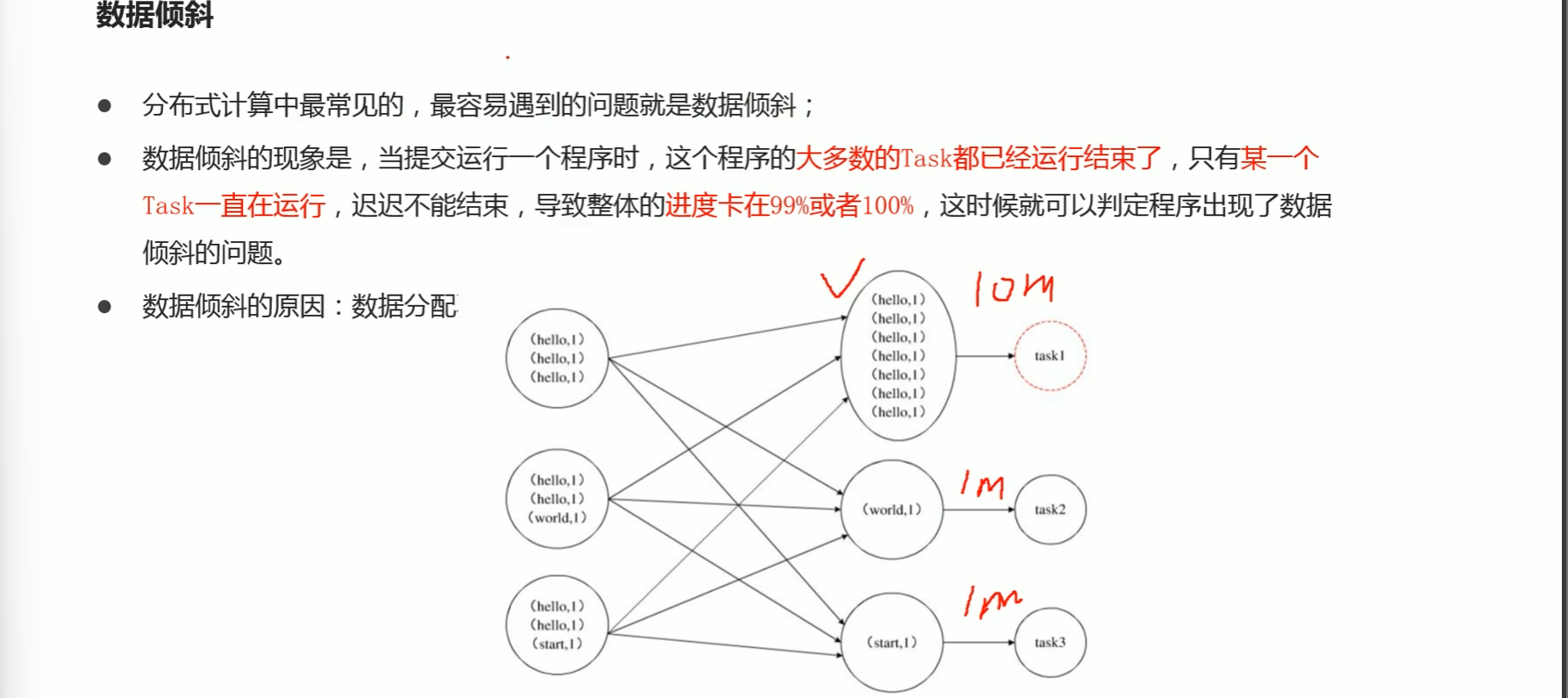

知识点15:Apache Hive--通用调优--数据倾斜优化

数据倾斜:数据分布的不平均现象

-

group by数据倾斜

-

方案一:开启Map端聚合

hive.map.aggr=true; #是否在Hive Group By 查询中使用map端聚合。 #这个设置可以将顶层的部分聚合操作放在Map阶段执行,从而减轻清洗阶段数据传输和Reduce阶段的执行时间,提升总体性能。但是指标不治本。 -

方案二:实现随机分区

实现随机分区 select * from table distribute by rand(); -

方案三:数据倾斜时自动负载均衡

hive.groupby.skewindata=true; #开启该参数以后,当前程序会自动通过两个MapReduce来运行 #第一个MapReduce自动进行随机分布到Reducer中,每个Reducer做部分聚合操作,输出结果 #第二个MapReduce将上一步聚合的结果再按照业务(group by key)进行处理,保证相同的分布到一起,最终聚合得到结果

-

-

join数据倾斜

-

方案一:提前过滤,将大数据变成小数据,实现Map Join

-

方案二:使用Bucket Join

-

方案三:使用Skew Join

#将Map Join和Reduce Join进行合并,如果某个值出现了数据倾斜,就会将产生数据倾斜的数据单独使用Map Join来实现 #其他没有产生数据倾斜的数据由Reduce Join来实现,这样就避免了Reduce Join中产生数据倾斜的问题 #最终将Map Join的结果和Reduce Join的结果进行Union合并 #开启运行过程中skewjoin set hive.optimize.skewjoin=true; #如果这个key的出现的次数超过这个范围 set hive.skewjoin.key=100000; #在编译时判断是否会产生数据倾斜 set hive.optimize.skewjoin.compiletime=true; set hive.optimize.union.remove=true; #如果Hive的底层走的是MapReduce,必须开启这个属性,才能实现不合并 set mapreduce.input.fileinputformat.input.dir.recursive=true;

-

知识点16:Apache Hive--通用调优--MR程序task个数调整

-

maptask个数

-

如果是在MapReduce中 maptask是通过逻辑切片机制决定的。

-

但是在hive中,影响的因素很多。比如逻辑切片机制,文件是否压缩、压缩之后是否支持切割。

-

因此在Hive中,调整MapTask的个数,直接去HDFS调整文件的大小和个数,效率较高。

如果小文件多,就进行小文件的合并 合并的大小最好=block size 如果大文件多,就调整blocl size

-

-

reducetask个数

-

如果在MapReduce中,通过代码可以直接指定 job.setNumReduceTasks(N)

-

在Hive中,reducetask个数受以下几个条件控制的

(1)每个 Reduce 处理的数据量默认是 256MB hive.exec.reducers.bytes.per.reducer=256000000 (2)每个任务最大的 reduce 数,默认为 1009 hive.exec.reducsers.max=1009 (3)mapreduce.job.reduces 该值默认为-1,由 hive 自己根据任务情况进行判断。 --如果用户用户不设置 hive将会根据数据量或者sql需求自己评估reducetask个数。 --用户可以自己通过参数设置reducetask的个数 set mapreduce.job.reduces = N --用户设置的不一定生效,如果用户设置的和sql执行逻辑有冲突,比如order by,在sql编译期间,hive又会将reducetask设置为合理的个数。 Number of reduce tasks determined at compile time: 1

-

知识点17:Apache Hive--通用调优--执行计划

-

通过执行计划可以看出hive接下来是如何打算执行这条sql的。

-

语法格式:explain + sql语句

-

栗子

explain select * from student; +----------------------------------------------------+ | Explain | +----------------------------------------------------+ | STAGE DEPENDENCIES: | | Stage-0 is a root stage | | | | STAGE PLANS: | | Stage: Stage-0 | | Fetch Operator | | limit: -1 | | Processor Tree: | | TableScan | | alias: student | | Statistics: Num rows: 1 Data size: 5260 Basic stats: COMPLETE Column stats: NONE | | Select Operator | | expressions: num (type: int), name (type: string), sex (type: string), age (type: int), dept (type: string) | | outputColumnNames: _col0, _col1, _col2, _col3, _col4 | | Statistics: Num rows: 1 Data size: 5260 Basic stats: COMPLETE Column stats: NONE | | ListSink | | | +----------------------------------------------------+

知识点18:Apache Hive--通用调优--并行机制、推测执行机制

-

并行执行机制

-

如果hivesql的底层某些stage阶段可以并行执行,就可以提高执行效率。

-

前提是stage之间没有依赖 并行的弊端是瞬时服务器压力变大。

-

参数

set hive.exec.parallel=true; --是否并行执行作业。适用于可以并行运行的 MapReduce 作业,例如在多次插入期间移动文件以插入目标 set hive.exec.parallel.thread.number=16; --最多可以并行执行多少个作业。默认为8。

-

-

Hive的严格模式

-

注意。不要和动态分区的严格模式搞混淆。

-

这里的严格模式指的是开启之后 hive会禁止一些用户都影响不到的错误包括效率低下的操作,不允许运行一些有风险的查询。

-

设置

set hive.mapred.mode = strict --默认是严格模式 nonstrict -

解释

1、如果是分区表,没有where进行分区裁剪 禁止执行 2、order by语句必须+limit限制

-

-

推测执行机制

- MapReduce中task的一个机制。

- 功能:

- 一个job底层可能有多个task执行,如果某些拖后腿的task执行慢,可能会导致最终job失败。

- 所谓的推测执行机制就是通过算法找出拖后腿的task,为其启动备份的task。

- 两个task同时处理一份数据,谁先处理完,谁的结果作为最终结果。

- 推测执行机制默认是开启的,但是在企业生产环境中建议关闭。